Yesterday, Anthropic published an amazing study—they developed a system called Clio, which can safely analyze millions of conversations with the AI assistant Claude.

A New Perspective on AI Usage

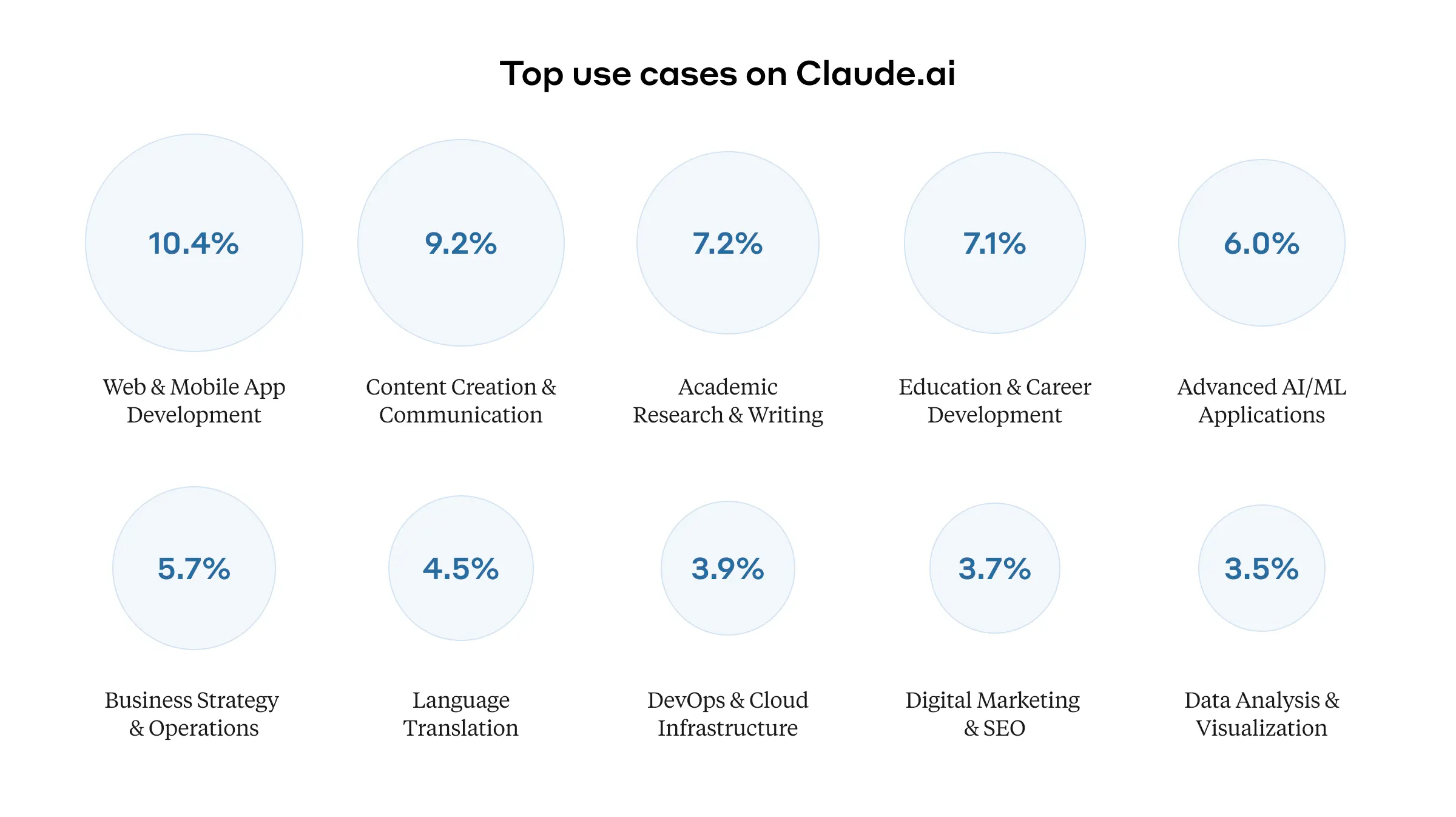

Clio allows for examining real-life scenarios of AI usage in everyday life while preserving user privacy by working only with aggregated data. Anthropic has revealed fascinating trends:

- Most popular tasks: Users primarily rely on AI for programming, content creation, and research.

- Cultural differences: AI usage varies by region; for instance, in Japan, discussions often center on societal challenges like aging populations.

- Enhanced security: Clio’s analysis uncovered new ways people attempt to misuse AI, enabling developers to strengthen safeguards.

This system functions much like Google Trends, but for conversations with AI, identifying patterns, anomalies, and trends without exposing personal user data. It’s a breakthrough in understanding how people truly engage with AI systems.

How Anthropic’s Clio works

At the foundation of Clio is a multi-level data processing pipeline, featuring:

Feature extraction

Clio uses Anthropic’s LLMs to:

- Analyze individual dialogues and extract parameters like language, topic, and user intent.

- Work with direct metrics (e.g., dialogue length) and perform semantic analysis for deeper insights.

Intelligent clustering

To make sense of massive amounts of data, Clio:

- Applies embedding-based clustering techniques to group similar conversations.

- Utilizes k-means with dynamic optimization to determine the ideal number of clusters.

- Builds a hierarchical structure of usage patterns, offering a nuanced understanding of how people use AI.

Privacy protection

User privacy is central to Clio’s design. The system:

- Implements multi-level filtering to remove personal data.

- Aggregates information only when there are sufficient similar cases, ensuring no identifiable data is exposed.

- Runs automatic checks to verify the absence of sensitive information.

Why this matters

Clio is more than just a tool for analysis. It represents a fundamental shift in how we approach AI development:

- Unprecedented scale: For the first time, we have a clear picture of AI usage across millions of conversations.

- Privacy-preserving insights: Clio balances powerful analytics with robust user privacy protections.

- Future-proof foundations: By using AI to analyze AI, Anthropic has laid the groundwork for safer, more ethical AI systems.

The research marks an important step in the development of transparent and ethical methods for analyzing AI systems, combining advanced machine learning technologies with principles of privacy protection.

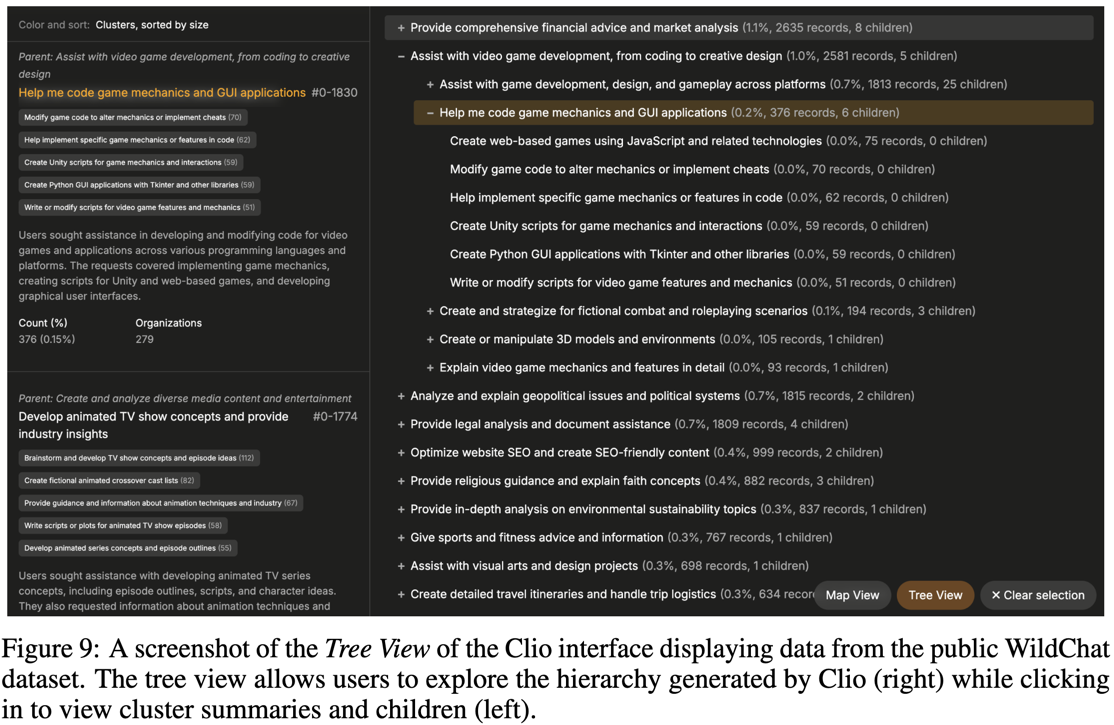

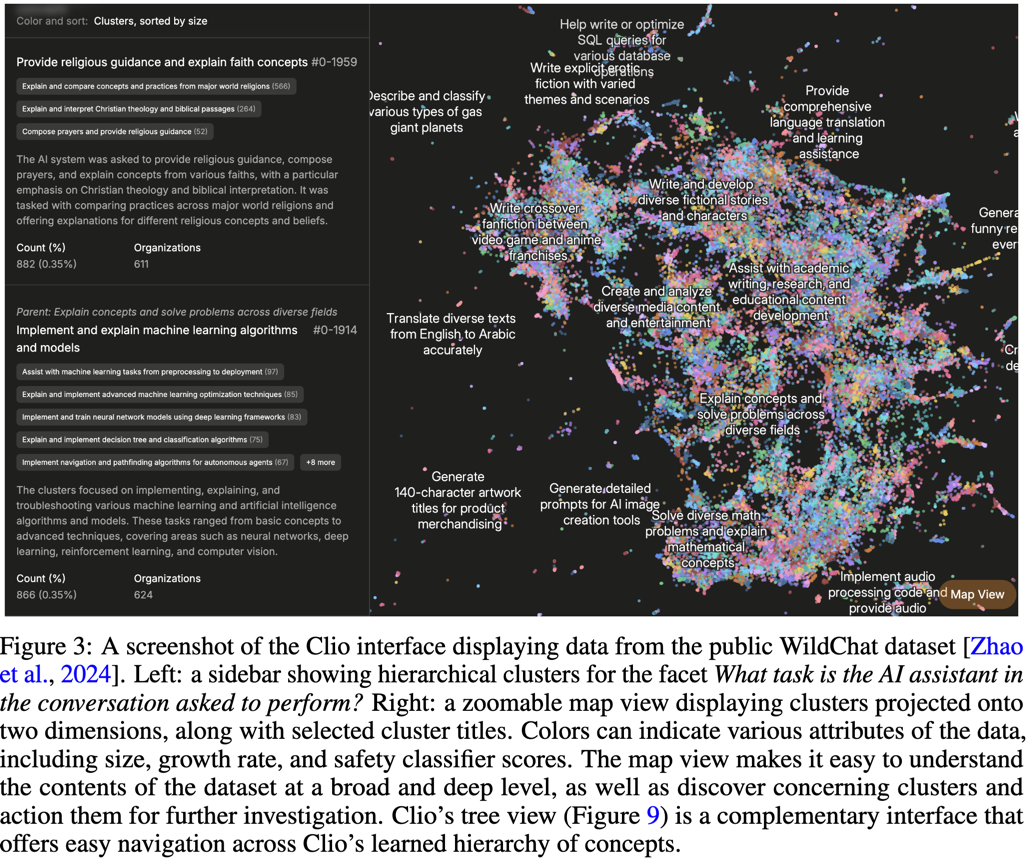

How Clio system visualize the data

Here’s the screenshots of Clio’s UI found in the full research paper:

Tree view of clusters:

Map view of clusters:

Read more

I recommend you to check out their announcement post and full research paper with details on how they implemented Clio system.

Anthropic blogpost: link

Full research paper: link